AWS

Service catalogue

Prescriptive-guidance

Prescriptive Guidance provides time-tested strategies, guides, and patterns to help accelerate your cloud migration, modernization, and optimization projects.

Library

- Application-building patterns: https://aws.amazon.com/builders-library/

- Infrastructure patterns: https://aws.amazon.com/architecture/well-architected/

Glossary

Regions and Availability-Zones

Amazon cloud servers can be used in different Regions.

Each Region is divided into Availability Zones.

Regions are largely isolated from each other. The Availability Zones within a Region are more closely interconnected; one is chosen each time an instance is launched. Data can be transferred easily within one Availability Zone, but less easily between Availability Zones or between Regions.

Regions

US-East, US-West, EU-West, Asia-Pacific…

Availability zones

e.g.: eu-west-1a, eu-west-1b, eu-west-1c

VPC Virtual Private Cloud

Data Traffic pricing

| Traffic | Source |
|---|---|
| Traffic within “the same Availability Zone is free” | https://aws.amazon.com/ec2/pricing/on-demand/ |
| Traffic “across Availability Zones or VPC Peering connections in the same AWS Region is charged at $0.01/GB” | https://aws.amazon.com/ec2/pricing/on-demand/ |
| Traffic “transferred across Inter-Region VPC Peering connections is charged at the standard inter-region data transfer rates.” ($0.01/GB - $0.02/GB) | https://aws.amazon.com/about-aws/whats-new/2017/11/announcing-support-for-inter-region-vpc-peering/ For prices, see “Data Transfer OUT From Amazon EC2 To” at https://aws.amazon.com/ec2/pricing/on-demand/ |

Network segment

CIDR masks separate the network into segments.

Subnet calculator for CIDR blocks, which is able to calculate asymmetric blocks
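
What such a calculator does can be sketched with Python's standard `ipaddress` module. The `10.0.0.0/16` range is only an assumed example, not a value from these notes; the block is split into one large and many small ("asymmetric") subnets.

```python
import ipaddress

# Hypothetical VPC range, chosen only for illustration.
vpc = ipaddress.ip_network("10.0.0.0/16")

# Split the /16 into two /17 halves, then split only the second half
# further into /24 blocks, which yields subnets of different sizes.
first_half, second_half = vpc.subnets(prefixlen_diff=1)
small_blocks = list(second_half.subnets(new_prefix=24))


def describe(net):
    """Return (CIDR string, number of addresses) for a network."""
    return str(net), net.num_addresses


print(describe(first_half))
print(describe(small_blocks[0]))
print("overlap:", first_half.overlaps(small_blocks[0]))
```

The same module can check whether two planned subnets overlap before they are created in the VPC.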

AWS ACL (Access Control List)

Network ACLs are stateless: they do not remember traffic that has left and do not automatically allow the return traffic (in contrast to security groups).

The inbound ports must be the ports on which a service is running within the VPC subnet (e.g. 22, 80 …).

- To allow outbound traffic back from the subnet to the client:

- As the port range you must NOT use the service ports (22, 80, …).

- Instead, the “Ephemeral Port Range” is used to communicate from within the subnet back to the client.

- The ephemeral port range is chosen by the client OS.
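
The inbound/outbound pairing can be sketched as the two rule definitions one would pass to the EC2 `create_network_acl_entry` call. The helper name, the rule number, the ACL id and the `1024-65535` range are assumptions for illustration; the range a given client actually uses depends on its OS.

```python
def acl_entries_for_service(acl_id, service_port, client_cidr="0.0.0.0/0",
                            ephemeral=(1024, 65535)):
    """Build keyword arguments for two create_network_acl_entry calls.

    Hypothetical helper: inbound rule on the service port, outbound rule
    on the (assumed) ephemeral port range for the return traffic.
    """
    inbound = {
        "NetworkAclId": acl_id,
        "RuleNumber": 100,          # arbitrary example rule number
        "Protocol": "6",            # 6 = TCP
        "RuleAction": "allow",
        "Egress": False,            # inbound rule
        "CidrBlock": client_cidr,
        "PortRange": {"From": service_port, "To": service_port},
    }
    # Same rule, but outbound and on the ephemeral ports instead of the service port.
    outbound = dict(inbound, Egress=True,
                    PortRange={"From": ephemeral[0], "To": ephemeral[1]})
    return [inbound, outbound]


entries = acl_entries_for_service("acl-0example", 22)
for entry in entries:
    print(entry["Egress"], entry["PortRange"])
```

With boto3 each dictionary could then be applied via `boto3.client("ec2").create_network_acl_entry(**entry)`.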

See examples of port ranges by OS:

Compare Security Groups and ACLs:

AWS Command Line Interface

Commands Reference http://docs.aws.amazon.com/cli/latest/reference/ec2/index.html#cli-aws-ec2

VPC architecture

The architecture inside the VPC.

- connecting on premises and be able to scale?

- maintain resources in a shared VPC?

- Transit Gateway

https://www.youtube.com/watch?v=ar6sLmJ45xs&feature=youtu.be&t=1361

Filters

There are predefined filters, which can be applied to several commands.

Filter names DO NOT MATCH the property names in the output! E.g. instance-id in the filter but InstanceId in the JSON.

| Command | List of filters |
|---|---|
| describe-instances | http://docs.aws.amazon.com/cli/latest/reference/ec2/describe-instances.html |
| describe-volumes | http://docs.aws.amazon.com/cli/latest/reference/ec2/describe-volumes.html |

# volume ids for given instance
aws ec2 describe-volumes --filters Name=attachment.instance-id,Values='i-44abd0cc' --query 'Volumes[].VolumeId' --output text

# root device name for given instance
aws ec2 describe-instances --filters Name='instance-id',Values='i-44abd0cc' --query 'Reservations[0].Instances[0].RootDeviceName'
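
The naming mismatch between filters and output shows up the same way in boto3. The following is a minimal sketch with hypothetical helper names and a made-up sample response; it only builds the `Filters` argument and reads the result, without calling AWS.

```python
def volume_filter(instance_id):
    """Filters argument for describe_volumes: the filter name is hyphenated."""
    return [{"Name": "attachment.instance-id", "Values": [instance_id]}]


def volume_ids(response):
    """Extract volume ids: the response uses CamelCase keys such as VolumeId."""
    return [volume["VolumeId"] for volume in response.get("Volumes", [])]


# Made-up response in the shape returned by describe_volumes.
sample_response = {"Volumes": [{"VolumeId": "vol-0example1"},
                               {"VolumeId": "vol-0example2"}]}

print(volume_filter("i-44abd0cc"))
print(volume_ids(sample_response))
```

Against a real account the filter would be passed as `boto3.client("ec2").describe_volumes(Filters=volume_filter("i-44abd0cc"))`.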

Query

http://docs.aws.amazon.com/cli/latest/userguide/controlling-output.html#controlling-output-filter

Specific tag filtering

--query 'Reservations[0].Instances[0].Tags[?Key==`Name`].Value'
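
To make clear what this query selects, here is a plain-Python equivalent applied to a made-up `describe-instances` result. The sample data and the function name are assumptions; the CLI itself evaluates the expression with JMESPath.

```python
def name_tag_values(response):
    """Plain-Python mirror of Reservations[0].Instances[0].Tags[?Key==`Name`].Value."""
    tags = response["Reservations"][0]["Instances"][0].get("Tags", [])
    return [tag["Value"] for tag in tags if tag["Key"] == "Name"]


# Made-up response in the shape returned by describe-instances.
sample = {
    "Reservations": [
        {"Instances": [
            {"InstanceId": "i-44abd0cc",
             "Tags": [{"Key": "Name", "Value": "webserver"},
                      {"Key": "Stage", "Value": "dev"}]}
        ]}
    ]
}

print(name_tag_values(sample))
```

As with the CLI query, the result is a list, because the filter expression could match more than one tag.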

Automatic Backups

Existing solution

An AWS Marketplace image that can be launched and contains a ready-made Asigra backup solution: https://aws.amazon.com/marketplace/pp/B01FWRFHLM?qid=1478774150478&sr=0-2&ref_=srh_res_product_title

Own Script

The AWS CLI is required for this script. How to configure it: http://docs.aws.amazon.com/cli/latest/userguide/cli-chap-getting-started.html

Script to make automatic snapshots and clean up:

#!/bin/bash

export PATH=$PATH:/usr/local/bin/:/usr/bin

# Safety feature: exit script if error is returned, or if variables not set.

# Exit if a pipeline results in an error.

set -ue

set -o pipefail

## Automatic EBS Volume Snapshot Creation & Clean-Up Script

#

# Written by Casey Labs Inc. (https://www.caseylabs.com)

# Contact us for all your Amazon Web Services Consulting needs!

# Script Github repo: https://github.com/CaseyLabs/aws-ec2-ebs-automatic-snapshot-bash

#

# Additional credits: Log function by Alan Franzoni; Pre-req check by Colin Johnson

#

# PURPOSE: This Bash script can be used to take automatic snapshots of your Linux EC2 instance. Script process:

# - Determine the instance ID of the EC2 server on which the script runs

# - Gather a list of all volume IDs attached to that instance

# - Take a snapshot of each attached volume

# - The script will then delete all associated snapshots taken by the script that are older than 7 days

#

# DISCLAIMER: This script deletes snapshots (though only the ones that it creates).

# Make sure that you understand how the script works. No responsibility accepted in event of accidental data loss.

#

## Variable Declarations ##

# Get Instance Details

instance_id=$(wget -q -O- http://169.254.169.254/latest/meta-data/instance-id)

region=$(wget -q -O- http://169.254.169.254/latest/meta-data/placement/availability-zone | sed -e 's/\([1-9]\).$/\1/g')

# Set Logging Options

logfile="/var/log/ebs-snapshot.log"

logfile_max_lines="5000"

# How many days do you wish to retain backups for? Default: 7 days

retention_days="7"

retention_date_in_seconds=$(date +%s --date "$retention_days days ago")

## Function Declarations ##

# Function: Setup logfile and redirect stdout/stderr.

log_setup() {

# Check if logfile exists and is writable.

( [ -e "$logfile" ] || touch "$logfile" ) && [ ! -w "$logfile" ] && echo "ERROR: Cannot write to $logfile. Check permissions or sudo access." && exit 1

tmplog=$(tail -n $logfile_max_lines $logfile 2>/dev/null) && echo "${tmplog}" > $logfile

exec > >(tee -a $logfile)

exec 2>&1

}

# Function: Log an event.

log() {

echo "[$(date +"%Y-%m-%d"+"%T")]: $*"

}

# Function: Confirm that the AWS CLI and related tools are installed.

prerequisite_check() {

for prerequisite in aws wget; do

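# Test inside the if condition: with "set -e" a bare failing command would abort the script before $? could be checked.

if ! hash "$prerequisite" &> /dev/null; then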

echo "In order to use this script, the executable \"$prerequisite\" must be installed." 1>&2; exit 70

fi

done

}

# Function: Snapshot all volumes attached to this instance.

snapshot_volumes() {

for volume_id in $volume_list; do

log "Volume ID is $volume_id"

# Get the attached device name to add to the description so we can easily tell which volume this is.

device_name=$(aws ec2 describe-volumes --region $region --output=text --volume-ids $volume_id --query 'Volumes[0].{Devices:Attachments[0].Device}')

# Take a snapshot of the current volume, and capture the resulting snapshot ID

snapshot_description="$(hostname)-$device_name-backup-$(date +%Y-%m-%d)"

snapshot_id=$(aws ec2 create-snapshot --region $region --output=text --description "$snapshot_description" --volume-id $volume_id --query SnapshotId)

log "New snapshot is $snapshot_id"

# Add a "CreatedBy:AutomatedBackup" tag to the resulting snapshot.

# Why? Because we only want to purge snapshots taken by the script later, and not delete snapshots manually taken.

aws ec2 create-tags --region $region --resources $snapshot_id --tags Key=CreatedBy,Value=AutomatedBackup

done

}

# Function: Cleanup all snapshots associated with this instance that are older than $retention_days

cleanup_snapshots() {

for volume_id in $volume_list; do

snapshot_list=$(aws ec2 describe-snapshots --region $region --output=text --filters "Name=volume-id,Values=$volume_id" "Name=tag:CreatedBy,Values=AutomatedBackup" --query Snapshots[].SnapshotId)

for snapshot in $snapshot_list; do

log "Checking $snapshot..."

# Check age of snapshot

snapshot_date=$(aws ec2 describe-snapshots --region $region --output=text --snapshot-ids $snapshot --query Snapshots[].StartTime | awk -F "T" '{printf "%s\n", $1}')

snapshot_date_in_seconds=$(date "--date=$snapshot_date" +%s)

snapshot_description=$(aws ec2 describe-snapshots --snapshot-ids $snapshot --region $region --query Snapshots[].Description)

if (( $snapshot_date_in_seconds <= $retention_date_in_seconds )); then

log "DELETING snapshot $snapshot. Description: $snapshot_description ..."

aws ec2 delete-snapshot --region $region --snapshot-id $snapshot

else

log "Not deleting snapshot $snapshot. Description: $snapshot_description ..."

fi

done

done

}

## SCRIPT COMMANDS ##

log_setup

prerequisite_check

# Grab all volume IDs attached to this instance

volume_list=$(aws ec2 describe-volumes --region $region --filters Name=attachment.instance-id,Values=$instance_id --query Volumes[].VolumeId --output text)

snapshot_volumes

cleanup_snapshots

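The script can be used in crontab as follows. Note that the script as listed above hard-codes retention_days and does not read the two arguments shown here; it has to be extended before the retention period and the short/long term label take effect.

# Minute Hour Day-of-Month Month Day-of-Week Command
# makes SHORT term backups every day. Deletes short term snapshots older than 7 days.
5 2 * * * /home/username/backupscript.sh 7 shortterm
# makes LONG term backups every Saturday night. Deletes long term snapshots older than 30 days.
30 2 * * 6 /home/username/backupscript.sh 30 longterm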

IAM Domains and Supporting Services

Which services do you need for identity management? https://www2.deloitte.com/content/dam/Deloitte/us/Documents/risk/us-cloud-and-identity-and-access-management.pdf

- Identification

- Authentication, Authorization

- Access Governance

- Accountability

Policies

Policies are assigned to groups, and groups are assigned to users. This way one can configure which resources a particular user can reach.

Write access to a S3 bucket

{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "s3:ListBucket",
                "s3:GetBucketLocation",
                "s3:ListBucketMultipartUploads",
                "s3:AbortMultipartUpload",
                "s3:DeleteObject",
                "s3:DeleteObjectVersion",
                "s3:GetObject",
                "s3:GetObjectAcl",
                "s3:GetObjectVersion",
                "s3:GetObjectVersionAcl",
                "s3:PutObject",
                "s3:PutObjectAcl",
                "s3:PutObjectVersionAcl"
            ],
            "Resource": [
                "arn:aws:s3:::aws-migration-landingzone/toaws/artifacts/*",
                "arn:aws:s3:::aws-migration-landingzone/toaws/svn/*",
                "arn:aws:s3:::aws-migration-landingzone/fromaws/artifacts/*",
                "arn:aws:s3:::aws-migration-landingzone/fromaws/svn/*"
            ]
        }
    ]
}

Route tables

Route tables define the rules for how traffic leaves the subnet.

Traffic destined for the Destination will be sent to the Target.
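
The destination/target lookup can be sketched as follows. When several routes match, the most specific one (longest prefix) wins. The route entries and the target names are invented for illustration.

```python
import ipaddress


def pick_target(routes, destination_ip):
    """Return the target of the most specific route that matches destination_ip."""
    ip = ipaddress.ip_address(destination_ip)
    matches = [(ipaddress.ip_network(cidr), target)
               for cidr, target in routes
               if ip in ipaddress.ip_network(cidr)]
    if not matches:
        return None  # no route: the traffic cannot leave the subnet
    # The longest prefix is the most specific match.
    return max(matches, key=lambda match: match[0].prefixlen)[1]


# Hypothetical route table: (Destination, Target)
routes = [("10.0.0.0/16", "local"), ("0.0.0.0/0", "igw-example")]

print(pick_target(routes, "10.0.3.7"))
print(pick_target(routes, "8.8.8.8"))
```

Traffic inside the VPC range stays on the local route, everything else falls through to the default route.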

Lambdas

Configuring functions: https://docs.aws.amazon.com/lambda/latest/dg/configuration-function-common.html

Python

Use the Python API boto3. Within boto3, use the client API, not the resource API.

Here is the documentation for the available services: http://boto3.readthedocs.io/en/latest/reference/services/index.html

Java - custom runtime

Build a custom Java runtime for AWS Lambda

https://aws.amazon.com/de/blogs/compute/build-a-custom-java-runtime-for-aws-lambda/

Organisations

SCP

The policies are applied at the organizational level.

This example shows how all services except sts, s3 and iam are denied:

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "AllowSTS",

"Effect": "Deny",

"NotAction": [

"sts:*",

"s3:*",

"iam:*"

],

"Resource": "*"

}

]

}

This is how policies are evaluated. https://docs.aws.amazon.com/IAM/latest/UserGuide/reference_policies_evaluation-logic.html
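
How a Deny statement with NotAction behaves can be sketched in a few lines. This is a simplified model with a hypothetical function name, not the full evaluation logic from the linked page: it only checks a single statement and ignores resources and conditions.

```python
from fnmatch import fnmatchcase


def denied_by_statement(action, not_actions):
    """True if a Deny + NotAction statement denies the given action.

    The statement denies everything that matches none of the NotAction
    patterns. Action names are compared case-insensitively.
    """
    return not any(fnmatchcase(action.lower(), pattern.lower())
                   for pattern in not_actions)


not_actions = ["sts:*", "s3:*", "iam:*"]

for action in ["ec2:RunInstances", "s3:GetObject", "sts:AssumeRole"]:
    print(action, "denied" if denied_by_statement(action, not_actions) else "not denied")
```

"Not denied" only means that the SCP does not block the action; an IAM policy still has to allow it.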

CloudFormation

Debugging can be done by following the logs.

Connect via SSH to the instance that is currently being modified and follow the logs:

tail -f /var/log/cloud-init-output.log

ELB

For experimenting with ELB, use the following script when provisioning EC2 instances to deploy a web server with a simple index.html.

The default site will show the name of the instance.

#!/bin/bash

yum update -y

yum install -y httpd24 php56 mysql55-server php56-mysqlnd

service httpd start

chkconfig httpd on

groupadd www

usermod -a -G www ec2-user

chown -R root:www /var/www

chmod 2775 /var/www

find /var/www -type d -exec chmod 2775 {} +

find /var/www -type f -exec chmod 0664 {} +

echo "<?php phpinfo(); ?>" > /var/www/html/phpinfo.php

uname -n > /var/www/html/index.html

STS

With this service you can give users a valid STS token. The users must first authenticate against something else, such as a corporate app. They can then use the token to identify themselves to AWS apps, and you can check programmatically whether the STS token is valid.

Details: https://www.slideshare.net/cloudITbetter/aws-security-token-service

S3

Guard Duty

Shield

AWS Shield protects the OSI model’s infrastructure layers (Layer 3 Network, Layer 4 Transport).

AWS Shield is a managed Distributed Denial of Service (DDoS) protection service, whereas AWS WAF is an application-layer firewall that controls access via web ACLs.

Shield Standard: AWS reacts to DDoS attacks.

Shield Advanced: AWS reacts to DDoS attacks and additionally provides a 24×7 response team and reports.

WAF Web Application Firewall

See https://medium.com/@comp87/deep-dive-into-the-aws-web-application-firewall-waf-14148ea0d3d

Network firewalls operate at Layer 3 (Network) and only understand the

- source IP Address,

- port, and

- protocol.

AWS Security Groups are a great example of this.

WAF works on OSI layer 7 (Application), which means it understands higher-level protocols such as an HTTP(S) request, including its

- headers,

- body,

- method, and

- URL.

WAF interacts with

- CloudFront distributions,

- Application Load Balancers,

- AppSync GraphQL APIs and

- API Gateway REST APIs.

A WAF can be configured to detect traffic from the following:

- specific IPs;

- IP ranges or country of origin;

- content patterns in request bodies, headers and cookies;

- SQL injection attacks;

- cross-site scripting; and

- IPs exceeding rate-based rules

When incoming traffic matches any of the configured rules, WAF can reject requests, return custom responses or simply create metrics to monitor applicable requests.
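
The rule matching described above can be sketched as a toy evaluator. This is a simplified model, not the WAF API: the rule names, the blocked IP range and the naive SQL check are assumptions. Rules are checked in order; a "count" rule only records a match and evaluation continues, while "block" or "allow" ends it.

```python
import ipaddress


def evaluate(request, rules, default_action="allow"):
    """Return (final action, names of count rules that matched)."""
    counted = []
    for rule in rules:
        if rule["matches"](request):
            if rule["action"] == "count":
                counted.append(rule["name"])  # only record the match, keep evaluating
                continue
            return rule["action"], counted    # terminating action
    return default_action, counted


blocked_range = ipaddress.ip_network("203.0.113.0/24")  # documentation range, example only

rules = [
    {"name": "blocked-ips", "action": "block",
     "matches": lambda r: ipaddress.ip_address(r["ip"]) in blocked_range},
    {"name": "naive-sqli", "action": "count",   # deliberately naive pattern check
     "matches": lambda r: "select " in r.get("body", "").lower()},
]

print(evaluate({"ip": "203.0.113.9"}, rules))
print(evaluate({"ip": "198.51.100.1", "body": "SELECT * FROM users"}, rules))
```

A count rule is useful for observing how often a pattern occurs before switching it to a blocking action.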

AWS API Gateway

Authentication and Authorization

SSH via Ec2 instance connect

One can SSH to an instance via the EC2 Instance Connect feature. It pushes a temporary SSH public key to the target machine, which requires the permission “ec2-instance-connect:SendSSHPublicKey”.

To set it up read: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ec2-instance-connect-set-up.html

After that one can ssh to the machine.

SSH Tunneling

ssh ec2-user@i-12342f8f3a2bc6367 -i ~/.ssh/ec2key.priv.openssh.ppk -fNTC -L 0.0.0.0:4440:localhost:4440

SSH connect

ssh ec2-user@i-09452f8f3a2bc6367 -i ~/.ssh/ec2key.priv.openssh.ppk

Boto3

Generate a presigned URL

Use the following script to generate a presigned POST URL and print a matching curl command.

import logging
import sys

import boto3

logger = logging.getLogger(__name__)
logging.basicConfig(stream=sys.stdout, level=logging.INFO)


def generate():
    s3 = boto3.client('s3')
    bucket_name = 'poc-arc-dev-signedurl'
    object_key = "test2.txt"

    # Ask S3 for a presigned POST: a URL plus the form fields that must accompany the upload.
    response = s3.generate_presigned_post(
        Bucket=bucket_name,
        Key=object_key,
    )
    logger.info("Got presigned POST URL: %s", response['url'])

    # Formulate a curl command now. The "file" field is added last so that it ends the form.
    response['fields']['file'] = '@{key}'.format(key=object_key)
    # Each line ends with a backslash directly before the newline, so the shell treats it as one command.
    form_values = " \\\n  ".join(["-F {key}={value}".format(key=key, value=value)
                                  for key, value in response['fields'].items()])
    print('curl command: \n')
    print('curl -v {form_values} \\\n  {url}'.format(form_values=form_values, url=response['url']))
    print('-' * 88)


if __name__ == '__main__':
    generate()

CloudWatch agent

The agent sends logs to CloudWatch.

Tested on Ubuntu 22.

Give the EC2 role of the machine access to the CloudWatch and EC2 services, so that the agent can write logs and metrics and read the EC2 metadata.

{

"Action": [

"cloudwatch:Put*",

"logs:Create*",

"logs:Put*",

"logs:Describe*",

"ec2:Describe*",

"ec2:Read*",

"ec2:List*"

],

"Effect": "Allow",

"Resource": "*"

}

Config files:

file: “amazon-cloudwatch-agent.json”

The agent is configured to run as root, so that it has permission to read the log files.

{

"agent" : {

"metrics_collection_interval": 60,

"run_as_user": "root"

},

"logs": {

"logs_collected": {

"files": {

"collect_list": [

{

"file_path": "/opt/aws/amazon-cloudwatch-agent/logs/amazon-cloudwatch-agent.log",

"log_group_name": "alf-dev-webserv2-ec2instance",

"log_stream_name": "amazon-cloudwatch-agent.log",

"timezone": "UTC"

},

{

"file_path": "/var/log/syslog",

"log_group_name": "alf-dev-webserv2-ec2instance",

"log_stream_name": "syslog",

"timezone":"UTC"

}

]

}

},

"force_flush_interval" : 5

},

"metrics": {

"append_dimensions": {

"AutoScalingGroupName": "${aws:AutoScalingGroupName}",

"ImageId": "${aws:ImageId}",

"InstanceId": "${aws:InstanceId}",

"InstanceType": "${aws:InstanceType}"

},

"metrics_collected": {

"disk": {

"measurement": [

"used_percent"

],

"metrics_collection_interval": 60,

"resources": [

"*"

]

},

"mem": {

"measurement": [

"mem_used_percent"

],

"metrics_collection_interval": 60

}

}

}

}

The ansible playbook to install the agent

file: “configCloudWatchAgent.ansible.yaml”

---
# Install and configure the CloudWatch agent
- hosts: "{{ host }}"
  become: yes
  become_method: sudo
  become_user: root
  tasks:
    - name: Download awslogs-agent installer
      get_url:
        url: https://amazoncloudwatch-agent.s3.amazonaws.com/ubuntu/amd64/latest/amazon-cloudwatch-agent.deb
        dest: /tmp/amazon-cloudwatch-agent.deb
        owner: "root"
        group: "root"
        force: yes
        mode: 0777

    - name: Install deb
      apt:
        deb: /tmp/amazon-cloudwatch-agent.deb
      become: true
      register: result

    - debug:
        msg: "{{ result }}"

    - name: Remove the /tmp/amazon-cloudwatch-agent.deb
      file:
        path: /tmp/amazon-cloudwatch-agent.deb
        state: absent

    - name: awslogs config folder
      file:
        path: /var/awslogs/etc/
        state: directory
        mode: "u=rwx,g=rwx,o=rwx"
        owner: root
        group: root
        recurse: yes

    - name: Copy a "amazon-cloudwatch-agent.json" file on the remote machine for editing. Overwrite if exists
      copy:
        src: "{{ playbook_dir }}/../config/amazon-cloudwatch-agent.json"
        dest: /opt/aws/amazon-cloudwatch-agent/etc/amazon-cloudwatch-agent.json
        mode: "u=rwx,g=r,o=r"
        remote_src: false

    - name: now fetch the config and run agent
      ansible.builtin.shell:
        cmd: amazon-cloudwatch-agent-ctl -s -a fetch-config -c file:/opt/aws/amazon-cloudwatch-agent/etc/amazon-cloudwatch-agent.json
      become: true

Check the status of the service; it should be “active (running)”:

sudo systemctl status amazon-cloudwatch-agent.service

Check status of agent via ctl tool

$ amazon-cloudwatch-agent-ctl -a status

{

"status": "running",

"starttime": "2023-09-12T09:09:08+00:00",

"configstatus": "configured",

"version": "1.300026.3b189"

}

The consolidated configuration, which the agent generates from the manually written config file above, can be inspected with:

$ cat /opt/aws/amazon-cloudwatch-agent/etc/amazon-cloudwatch-agent.yaml

connectors: {}
exporters:
    awscloudwatch:
        force_flush_interval: 1m0s
        max_datums_per_call: 1000
        max_values_per_datum: 150
        namespace: CWAgent
        region: eu-central-1
        resource_to_telemetry_conversion:
            enabled: true
extensions: {}
processors:
    ec2tagger:
        ec2_instance_tag_keys:
            - AutoScalingGroupName
        ec2_metadata_tags:
            - ImageId
            - InstanceId
            - InstanceType
        refresh_interval_seconds: 0s
receivers:
    telegraf_disk:
        collection_interval: 1m0s
        initial_delay: 1s
    telegraf_mem:
        collection_interval: 1m0s
        initial_delay: 1s
service:
    extensions: []
    pipelines:
        metrics/host:
            exporters:
                - awscloudwatch
            processors:
                - ec2tagger
            receivers:
                - telegraf_mem
                - telegraf_disk
    telemetry:
        logs:
            development: false
            disable_caller: false
            disable_stacktrace: false
            encoding: console
            error_output_paths: []
            initial_fields: {}
            level: info
            output_paths:
                - /opt/aws/amazon-cloudwatch-agent/logs/amazon-cloudwatch-agent.log

The agent's own logs are in:

$ cat /opt/aws/amazon-cloudwatch-agent/logs/amazon-cloudwatch-agent.log

Backup strategies

Which patterns for high availability are available?

How much do they cost?

Migration

AWS Prescriptive Guidance describes quite well how to organize a migration:

Domains

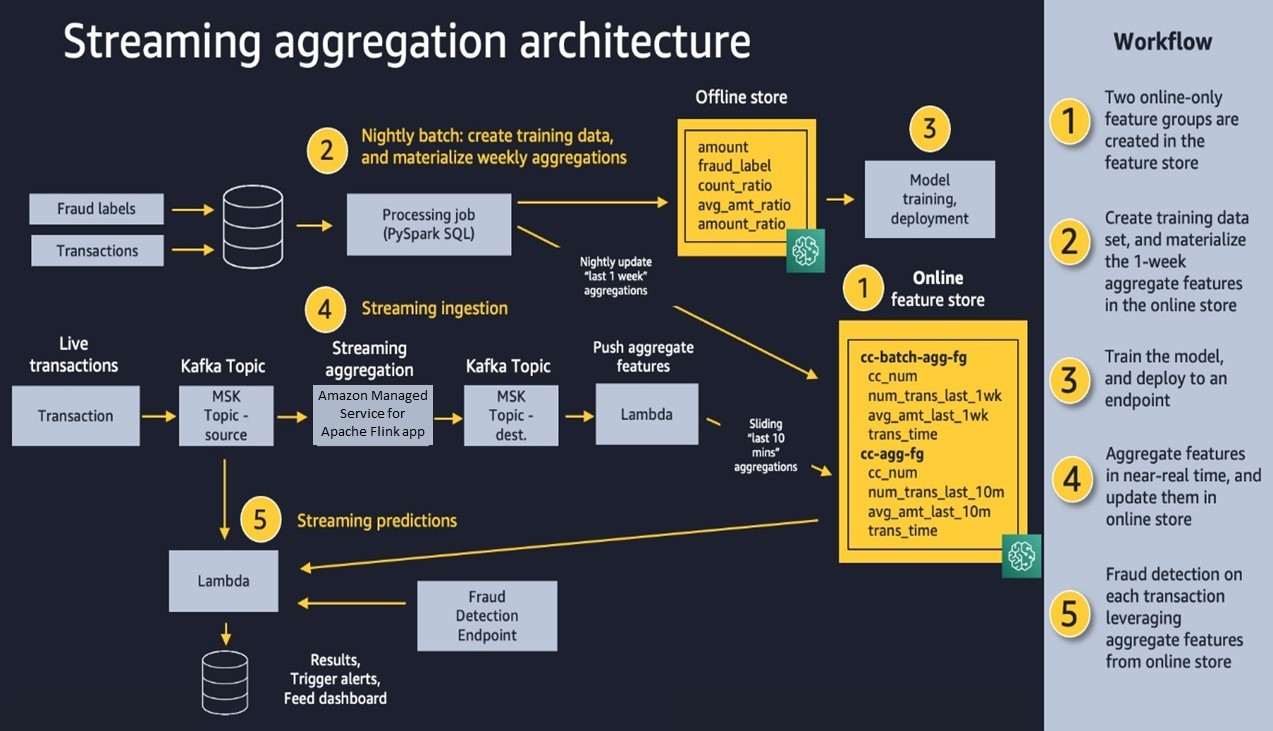

Finance and credit card

Real time credit card fraud evaluation:

Goldman Sachs

Achieving cross-regional availability